The Silicon Valley-based tech giant said the matching of photos would be "powered by a cryptographic technology" to determine "if there is a match without revealing the result," unless the image was found to contain depictions of child sexual abuse.

Apple will report such images to the National Center for Missing and Exploited Children, which works with police, according to a statement by the company. However, several digital rights organizations say the tweaks to Apple's operating systems create a potential "backdoor" into gadgets that could be exploited by governments or other groups.

Apple counters that it will not have direct access to the images and stresses the steps it has taken to protect privacy and security.

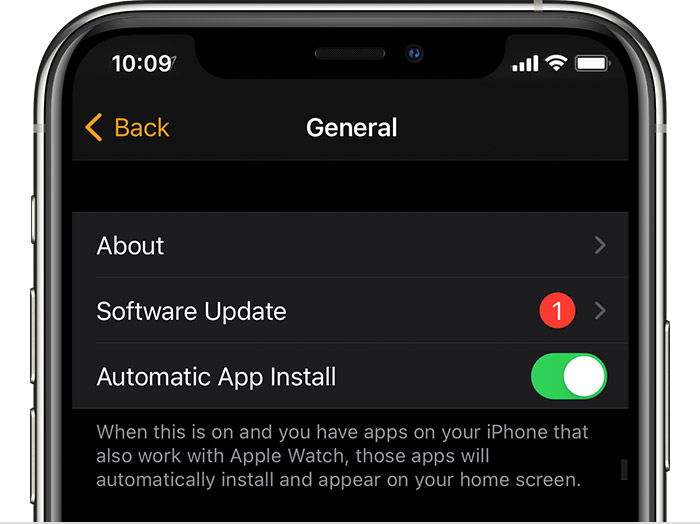

Apple said Thursday that iPhones and iPads will soon start detecting images containing child sexual abuse and reporting them as they are uploaded to its online storage in the United States, a move privacy advocates say raises concerns.

"We want to help protect children from predators who use communication tools to recruit and exploit them, and limit the spread of child sexual abuse material (CSAM)," Apple said in an online post.

New technology will allow the software powering Apple mobile devices to match photos on a user's phone against a database of known CSAM images provided by child safety organizations, then flag matching images as they are uploaded to Apple's online iCloud storage, according to the company.
